Semantic-Based Sentence Recognition in Images Using Bimodal Deep Learning

Abstract

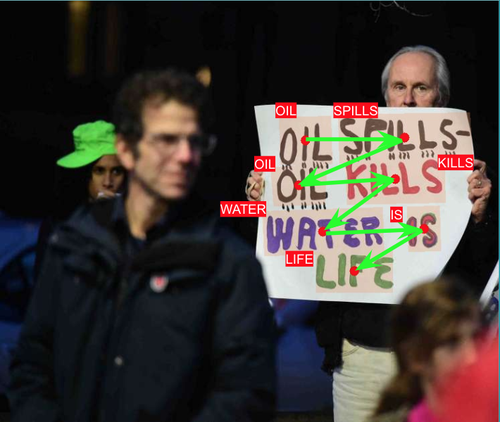

The accuracy of computer vision systems that understand sentences in images with text can be improved when semantic information about the text is utilized. Nonetheless, the semantic coherence within a region of text in natural or document images is typically ignored by state-of-the-art systems, which identify isolated words or interpret text word by word. However, when analyzed together, seemingly isolated words may be easier to recognize. On this basis, we propose a novel “Semantic-based Sentence Recognition” (SSR) deep learning model that reads text in images with the help of understanding context. SSR consists of a Word Ordering and Grouping Algorithm (WOGA) to find sentences in images and a Sequence-to-Sequence Recognition Correction (SSRC) model to extract semantic information in these sentences to improve their recognition. We present experiments with three notably distinct datasets, two of which we created ourselves. They respectively contain scanned catalog images of interior designs and photographs of protesters with hand-written signs. Our results show that SSR statistically significantly outperforms baseline methods that use state-of-the-art single-word-recognition techniques. By successfully combining both computer vision and natural language processing methodologies, we reveal the important opportunity that bi-modal deep learning can provide in addressing a task which was previously considered a single-modality computer vision task.

Published at: IEEE International Conference on Image Processing (ICIP), Anchorage, Alaska, USA, 2021.

Bibtex

@article{Zheng2021SemanticBasedSR,

title={Semantic-Based Sentence Recognition in Images Using Bimodal Deep Learning},

author={Y. Zheng and Qitong Wang and Margrit Betke},

journal={2021 IEEE International Conference on Image Processing (ICIP)},

year={2021},

pages={2753-2757},

url={https://api.semanticscholar.org/CorpusID:238082348}

}